Abstract

Highly available and scalable web hosting can be a complex and expensive proposition. Traditional scalable web architectures have not only needed to implement complex solutions to ensure high levels of reliability, but they have also required an accurate forecast of traffic to provide a high level of customer service. Dense peak traffic periods and wild swings in traffic patterns result in low utilization rates of expensive hardware. This yields high operating costs to maintain idle hardware, and an inefficient use of capital for underused hardware.

Amazon Web Services (AWS) provides a reliable, scalable, secure, and high-performance infrastructure for the most demanding web applications. This infrastructure matches IT costs with customer traffic patterns in real time.

This whitepaper is for IT managers and system architects who look to the cloud to help them achieve the scalability to meet their on-demand computing needs.

An Overview of Traditional Web Hosting

Scalable web hosting is a well-known problem space. Figure 1 depicts a traditional web hosting architecture that implements a common three-tier web application model. In this model, the architecture is separated into presentation, application, and persistence layers. Scalability is provided by adding hosts at these layers. The architecture also has built-in performance, failover, and availability features. The traditional web hosting architecture is easily ported to the AWS Cloud with only a few modifications.

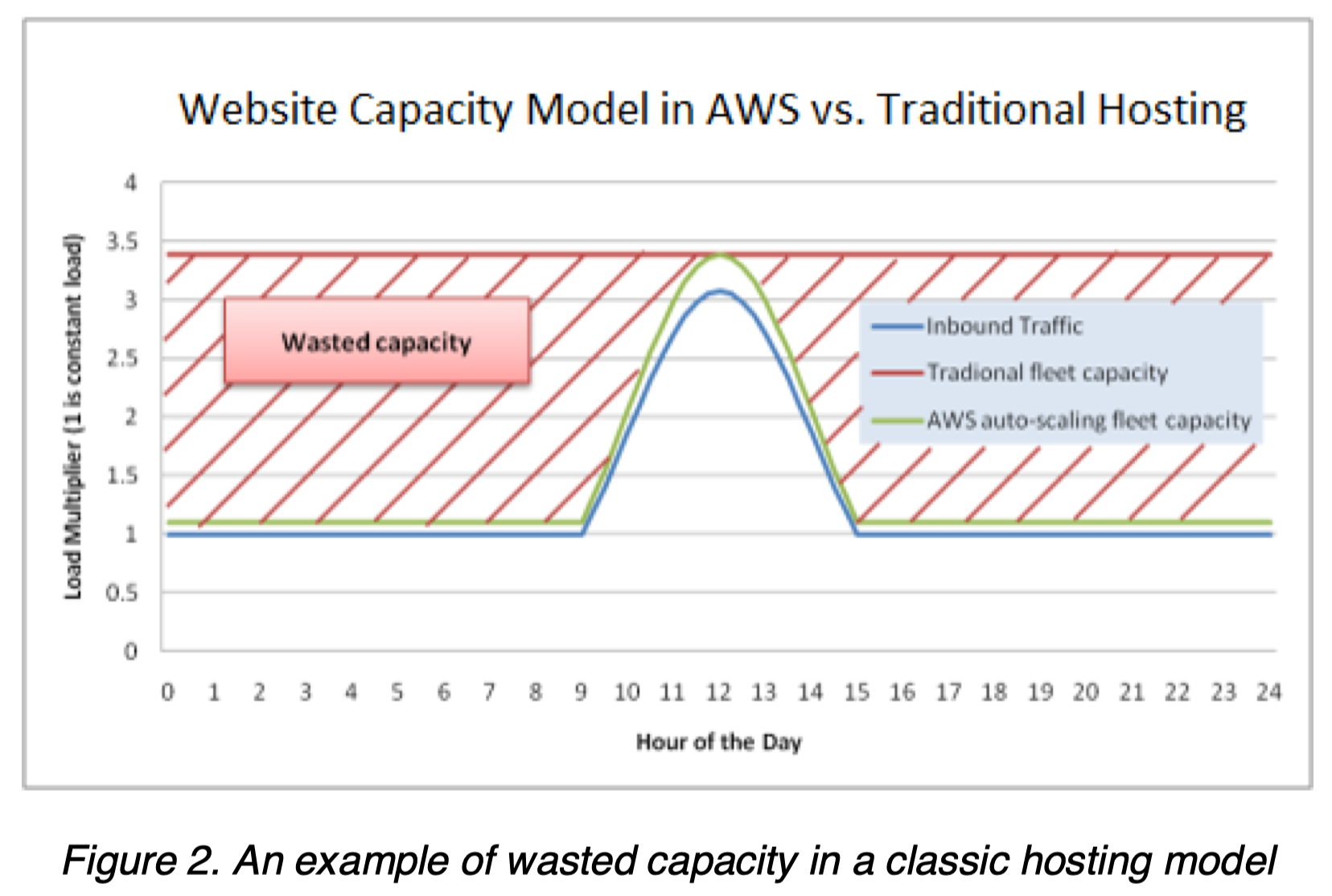


In the following sections, we look at why and how such an architecture should be and could be deployed in the AWS Cloud.

Web Application Hosting in the Cloud Using AWS

The first question that you should ask concerns the value of moving a classic web application hosting solution into the AWS Cloud. If you decide that the cloud is right for you, you’ll need a suitable architecture. This section helps you evaluate an AWS Cloud solution. It compares deploying your web application in the cloud to an on-premises deployment, presents an AWS Cloud architecture for hosting your application, and discusses the key components of this solution.

How AWS Can Solve Common Web Application Hosting Issues

If you’re responsible for running a web application, you face a variety of infrastructure and architectural issues for which AWS can provide seamless and cost-effective solutions. The following are just some of the benefits of using AWS over a traditional hosting model.

A Cost-Effective Alternative to Oversized Fleets Needed to Handle Peaks

In the traditional hosting model, you have to provision servers to handle peak capacity. Unused cycles are wasted outside of peak periods. Web applications hosted by AWS can leverage on-demand provisioning of additional servers, so you can constantly adjust capacity and costs to actual traffic patterns.

For example, the following graph shows a web application with a usage peak from 9AM to 3PM and less usage for the remainder of the day. An automatic scaling approach based on actual traffic trends, which provisions resources only when needed, would result in less wasted capacity and a greater than 50 percent reduction in cost.
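
The arithmetic behind a claim like this can be sketched with a toy demand profile. The server counts below are hypothetical and chosen only to match the shape described above (a peak from 9AM to 3PM, lower usage otherwise); real savings depend on your own traffic and pricing.

```python
# Hypothetical demand profile: servers needed for each hour of one day.
# Peak of 10 servers from 9AM to 3PM, 2 servers otherwise (illustrative numbers only).
PEAK_SERVERS = 10
OFF_PEAK_SERVERS = 2
hourly_demand = [
    PEAK_SERVERS if 9 <= hour < 15 else OFF_PEAK_SERVERS
    for hour in range(24)
]


def server_hours_fixed(demand):
    """Traditional model: provision for the peak around the clock."""
    return max(demand) * len(demand)


def server_hours_scaled(demand):
    """Scaled model: run only what each hour actually needs."""
    return sum(demand)


fixed = server_hours_fixed(hourly_demand)    # 10 * 24 = 240 server-hours
scaled = server_hours_scaled(hourly_demand)  # 10 * 6 + 2 * 18 = 96 server-hours
savings = 1 - scaled / fixed                 # fraction of server-hours avoided

print(f"fixed={fixed} scaled={scaled} savings={savings:.0%}")
```

With these assumed numbers, the scaled fleet uses 96 server-hours instead of 240, a 60 percent reduction.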

A Scalable Solution to Handling Unexpected Traffic Peaks

An even more dire consequence of the slow provisioning associated with a traditional hosting model is the inability to respond in time to unexpected traffic spikes. There are many stories about web applications going down because of an unexpected spike in traffic after the site is mentioned in the popular media. The same on-demand capability that helps web applications scale to match regular traffic spikes can also handle an unexpected load. New hosts can be launched and ready in a matter of minutes, and they can be taken offline just as quickly when traffic returns to normal.

An On-Demand Solution for Test, Load, Beta, and Preproduction Environments

The hardware costs of building out a traditional hosting environment for a production web application don’t stop with the production fleet. Quite often, you need to create preproduction, beta, and testing fleets to ensure the quality of the web application at each stage of the development lifecycle. While you can make various optimizations to ensure the highest possible use of this testing hardware, these parallel fleets are not always used optimally: a lot of expensive hardware sits unused for long periods of time.

In the AWS Cloud, you can provision testing fleets as you need them. Additionally, you can simulate user traffic on the AWS Cloud during load testing. You can also use these parallel fleets as a staging environment for a new production release. This enables quick switchover from current production to a new application version with little or no service outages.

An AWS Cloud Architecture for Web Hosting

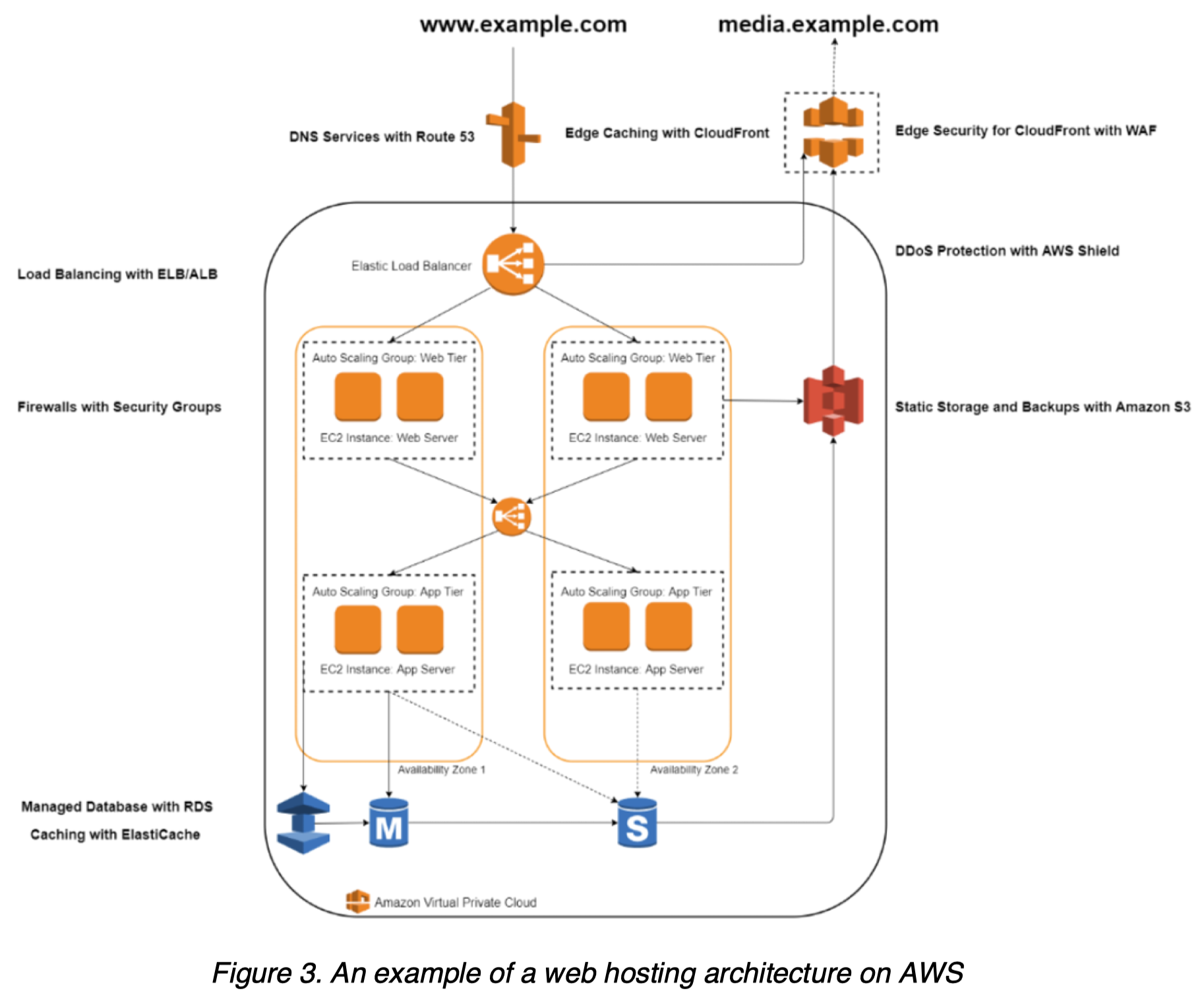

The following figure provides another look at that classic web application architecture and how it can leverage the AWS Cloud computing infrastructure.

- Load Balancing with Elastic Load Balancing (ELB)/Application Load Balancer (ALB) – Allows you to spread load across multiple Availability Zones and Amazon EC2 Auto Scaling groups for redundancy and decoupling of services.

- Firewalls with Security Groups – Moves security to the instance to provide a stateful, host-level firewall for both web and application servers.

- Caching with Amazon ElastiCache – Provides caching services with Redis or Memcached to remove load from the app and database, and lower latency for frequent requests.

- Managed Database with Amazon RDS – Creates a highly available, Multi-AZ database architecture with six possible DB engines.

- DNS Services with Amazon Route 53 – Provides DNS services to simplify domain management.

- Edge Caching with Amazon CloudFront – Edge caches high-volume content to decrease the latency to customers.

- Edge Security for Amazon CloudFront with AWS WAF – Filters malicious traffic, including XSS and SQL injection via customer-defined rules.

- DDoS Protection with AWS Shield – Safeguards your infrastructure against the most common network and transport layer DDoS attacks automatically.

- Static Storage and Backups with Amazon S3 – Enables simple HTTP-based object storage for backups and static assets like images and video.

Key Components of an AWS Web Hosting Architecture

The following sections outline some of the key components of a web hosting architecture deployed in the AWS Cloud, and explain how they differ from a traditional web hosting architecture.

Network Management

In a cloud environment such as AWS, the ability to segment your network from that of other customers enables a more secure and scalable architecture. While security groups provide host-level security (see the Host Security section), Amazon Virtual Private Cloud (Amazon VPC) allows you to launch resources in a logically isolated and virtual network that you define.

Amazon VPC is a free service that gives you full control over the details of your networking setup in AWS. Examples of this control include creating public-facing subnets for web servers, and private subnets with no internet access for your databases. Additionally, Amazon VPC enables you to create hybrid architectures by using hardware virtual private networks (VPNs), and use the AWS Cloud as an extension of your own data center.
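
As a rough illustration of that kind of planning, the following sketch carves a VPC address range into public and private subnets using Python's standard `ipaddress` module. The `10.0.0.0/16` range, the `/24` subnet size, and the subnet names are assumptions made for this example; the sketch only plans addresses and does not call any AWS API.

```python
import ipaddress

# Hypothetical VPC address range; pick a range that suits your own network.
vpc = ipaddress.ip_network("10.0.0.0/16")

# Split the VPC range into /24 subnets (256 of them for a /16).
subnets = list(vpc.subnets(new_prefix=24))

# Hypothetical plan: public subnets for web servers, private subnets for databases.
plan = {
    "public-web-a": subnets[0],    # 10.0.0.0/24
    "public-web-b": subnets[1],    # 10.0.1.0/24
    "private-db-a": subnets[10],   # 10.0.10.0/24
    "private-db-b": subnets[11],   # 10.0.11.0/24
}

for name, network in plan.items():
    print(f"{name}: {network} ({network.num_addresses} addresses)")
```

Whether a subnet is public or private is decided by its routing in the VPC, not by its address range; the names here only record the intent.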

Amazon VPC also includes IPv6 support in addition to traditional IPv4 support for your network.

Content Delivery

Edge caching is still relevant in the AWS Cloud computing infrastructure. Any existing solutions in your web application infrastructure should work just fine in the AWS Cloud. One additional option, however, is to use Amazon CloudFront for edge caching your website.

You can use CloudFront to deliver your website, including dynamic, static, and streaming content using a global network of edge locations. CloudFront automatically routes requests for your content to the nearest edge location, so content is delivered with the best possible performance. CloudFront is optimized to work with other AWS services, like Amazon Simple Storage Service (Amazon S3) and Amazon Elastic Compute Cloud (Amazon EC2). CloudFront also works seamlessly with any non-AWS origin server that stores the original, definitive versions of your files.

As with other AWS services, there are no contracts or monthly commitments for using CloudFront; you pay only for as much or as little content as you actually deliver through the service.

Managing Public DNS

Moving a web application to the AWS Cloud requires some DNS changes to take advantage of the multiple Availability Zones that AWS provides. To help you manage DNS routing, AWS provides Amazon Route 53, a highly available and scalable DNS web service. Amazon Route 53 automatically routes queries for your domain to the nearest DNS server. As a result, queries are answered with the best possible performance. Amazon Route 53 resolves requests for your domain name (for example, www.example.com) to your Classic Load Balancer, as well as your zone apex record (example.com).

Host Security

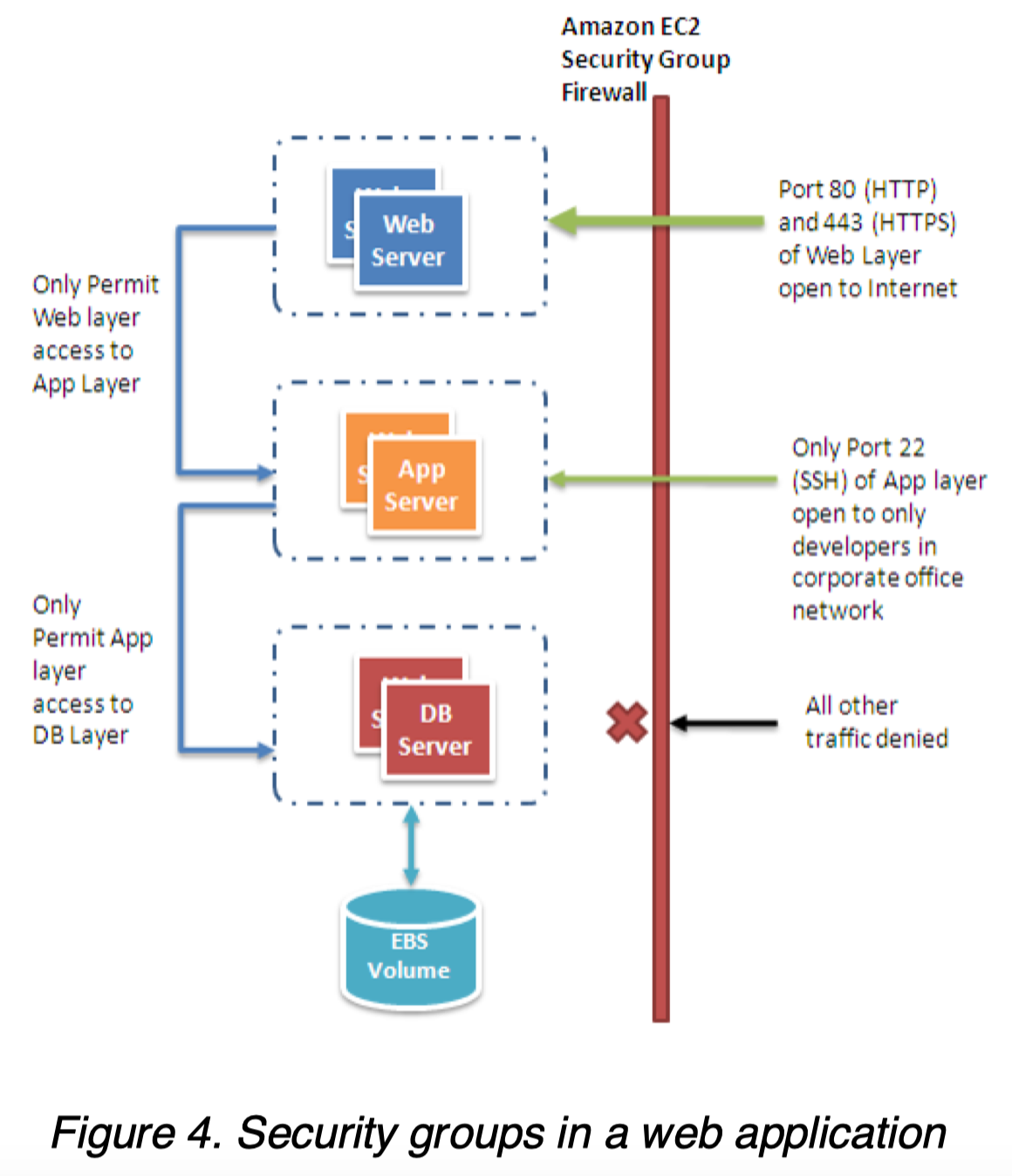

Unlike a traditional web hosting model, inbound network traffic filtering should not be confined to the edge; it should also be applied at the host level. Amazon EC2 provides a feature named security groups. A security group is analogous to an inbound network firewall, for which you can specify the protocols, ports, and source IP ranges that are allowed to reach your EC2 instances. You can assign one or more security groups to each EC2 instance. Each security group allows only the traffic that matches its rules to reach the instance. Security groups can be configured so that only specific subnets or IP addresses have access to an EC2 instance, or they can reference other security groups to limit access to EC2 instances that are in specific groups.

In the example AWS web hosting architecture in Figure 4, the security group for the web server cluster might allow access from any host, but only over TCP on ports 80 and 443 (HTTP and HTTPS), and from instances in the application server security group on port 22 (SSH) for direct host management. The application server security group, on the other hand, might allow access from the web server security group for handling web requests and from your organization’s subnet over TCP on port 22 (SSH) for direct host management. In this model, your support engineers could log in directly to the application servers from the corporate network and then access the other clusters from the application server boxes. For a deeper discussion on security, see the AWS Security Center. The center contains security bulletins, certification information, and security whitepapers that explain the security capabilities of AWS.
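
The rules in this example can be modeled as plain data to check that they behave as intended. The sketch below is not the Amazon EC2 API. The group names, the application port 8080, and the `192.0.2.0/24` corporate subnet are assumptions made for illustration, and only TCP port and source matching are modeled.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    port: int
    source: str  # either a CIDR block or the name of another security group


# Hypothetical groups mirroring the example above.
SECURITY_GROUPS = {
    "web-sg": [
        Rule(80, "0.0.0.0/0"),      # HTTP from any host
        Rule(443, "0.0.0.0/0"),     # HTTPS from any host
        Rule(22, "app-sg"),         # SSH only from application servers
    ],
    "app-sg": [
        Rule(8080, "web-sg"),       # web requests from web servers (assumed port)
        Rule(22, "192.0.2.0/24"),   # SSH from the corporate subnet (assumed range)
    ],
}


def is_allowed(target_group, port, source_ip, source_groups=()):
    """Return True if any inbound rule of target_group admits this traffic.

    Anything that matches no rule is denied.
    """
    for rule in SECURITY_GROUPS[target_group]:
        if rule.port != port:
            continue
        if rule.source in SECURITY_GROUPS:
            # Rule references another security group by name.
            if rule.source in source_groups:
                return True
        elif ipaddress.ip_address(source_ip) in ipaddress.ip_network(rule.source):
            return True
    return False


print(is_allowed("web-sg", 443, "203.0.113.7"))            # public HTTPS
print(is_allowed("web-sg", 22, "203.0.113.7"))             # public SSH
print(is_allowed("web-sg", 22, "10.0.1.5", ("app-sg",)))   # SSH from an app server
```

Referencing a group by name rather than by IP range keeps the rules valid as instances come and go, which matters when hosts have dynamic addresses.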

Load Balancing Across Clusters

Hardware load balancers are a common network appliance used in traditional web application architectures. AWS provides this capability through the Elastic Load Balancing (ELB) service. ELB is a configurable load-balancing solution that supports health checks on hosts, distribution of traffic to EC2 instances across multiple Availability Zones, and dynamic addition and removal of Amazon EC2 hosts from the load-balancing rotation. ELB can also dynamically grow and shrink the load-balancing capacity to adjust to traffic demands, while providing a predictable entry point by using a persistent CNAME. ELB also supports sticky sessions to address more advanced routing needs. If your application requires more advanced load-balancing capabilities you can run a software load-balancing package (e.g., Zeus, HAProxy, or NGINX Plus) on EC2 instances. You can then assign Elastic IP addresses to those load-balancing EC2 instances to minimize DNS changes.
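
To make the ideas of health checks and dynamic rotation concrete, here is a minimal in-memory sketch. It is a simple round-robin model and does not reflect how ELB is implemented; the class name and host names are hypothetical.

```python
import itertools


class LoadBalancer:
    """Toy round-robin balancer that skips hosts reported as unhealthy."""

    def __init__(self):
        self._hosts = {}                  # host name -> healthy flag
        self._counter = itertools.count()

    def register(self, host):
        """Add a host to the rotation (assumed healthy until reported otherwise)."""
        self._hosts[host] = True

    def deregister(self, host):
        """Remove a host from the rotation."""
        self._hosts.pop(host, None)

    def report_health(self, host, healthy):
        """Record the result of a health check for a registered host."""
        if host in self._hosts:
            self._hosts[host] = healthy

    def route(self):
        """Pick the next healthy host in round-robin order."""
        healthy = sorted(h for h, ok in self._hosts.items() if ok)
        if not healthy:
            raise RuntimeError("no healthy hosts in rotation")
        return healthy[next(self._counter) % len(healthy)]


lb = LoadBalancer()
lb.register("web-1")
lb.register("web-2")
first, second = lb.route(), lb.route()
lb.report_health("web-1", False)   # failed health check: web-1 leaves the rotation
third = lb.route()
print(first, second, third)
```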

Finding Other Hosts and Services

In the traditional web hosting architecture, most of your hosts have static IP addresses. In the cloud, most of your hosts will have dynamic IP addresses. Although every EC2 instance can have both public and private DNS entries and will be addressable over the internet, the DNS entries and the IP addresses are assigned dynamically when you launch the instance. They cannot be manually assigned. Static IP addresses (Elastic IP addresses in AWS terminology) can be assigned to running instances after they are launched. You should use Elastic IP addresses for instances and services that require consistent endpoints, such as master databases, central file servers, and EC2-hosted load balancers.

Server roles that can easily scale out and in, such as web servers, should be made discoverable at their dynamic endpoints by registering their IP address with a central repository. Because most web application architectures have a database server that is always on, the database server is a common repository for discovery information. For situations where consistent addressing is needed, instances can be allocated Elastic IP addresses from a pool of addresses by a bootstrapping script when the instance is launched.

Using this model, newly added hosts can request the list of necessary endpoints for communications from the database as part of a bootstrapping phase. The location of the database can be provided as user data that is passed into each instance as it is launched. Alternatively, you can use Amazon SimpleDB to store and maintain configuration information. SimpleDB is a highly available service that is available at a well-known endpoint.
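
The registration and lookup flow described above might look like the following sketch. An in-memory class stands in for the central repository (the always-on database or SimpleDB), and the role names, IP addresses, and function names are hypothetical.

```python
class EndpointRegistry:
    """In-memory stand-in for the central repository of discovery information."""

    def __init__(self):
        self._endpoints = {}  # role -> set of IP addresses

    def register(self, role, ip):
        self._endpoints.setdefault(role, set()).add(ip)

    def deregister(self, role, ip):
        self._endpoints.get(role, set()).discard(ip)

    def lookup(self, role):
        return sorted(self._endpoints.get(role, set()))


def bootstrap(registry, role, my_ip, needs):
    """Run at instance launch: announce this host, then fetch the endpoints it needs."""
    registry.register(role, my_ip)
    return {needed: registry.lookup(needed) for needed in needs}


registry = EndpointRegistry()
bootstrap(registry, "app", "10.0.2.10", needs=[])
bootstrap(registry, "app", "10.0.2.11", needs=[])
web_config = bootstrap(registry, "web", "10.0.1.10", needs=["app"])
print(web_config)
```

In a real deployment the registry location would be passed to each instance as user data, and hosts would deregister (or be expired) when they are taken out of service.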

Caching within the Web Application

In-memory application caches can reduce load on services and improve performance and scalability on the database tier by caching frequently used information. Amazon ElastiCache is a web service that makes it easy to deploy, operate, and scale an in-memory cache in the cloud. You can configure the in-memory cache you create to automatically scale with load and to automatically replace failed nodes. ElastiCache is protocol-compliant with Memcached and Redis, which simplifies migration from your current on-premises solution.
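
One common way an application uses such a cache is the cache-aside pattern: check the cache first and fall back to the database only on a miss. The sketch below uses a local dictionary in place of a Memcached or Redis client, so the class and its parameters are illustrative assumptions rather than any ElastiCache API.

```python
import time


class TTLCache:
    """Minimal cache-aside helper with a per-entry time to live."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested without sleeping
        self._store = {}         # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return the cached value, calling loader(key) only on a miss or expiry."""
        entry = self._store.get(key)
        now = self._clock()
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader(key)
        self._store[key] = (now + self._ttl, value)
        return value


db_calls = []


def load_from_database(key):
    """Hypothetical slow lookup; records each call so cache hits are visible."""
    db_calls.append(key)
    return key.upper()


cache = TTLCache(ttl_seconds=60)
cache.get_or_load("user:1", load_from_database)
cache.get_or_load("user:1", load_from_database)   # served from cache
print(len(db_calls))
```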

Database Configuration, Backup, and Failover

Many web applications contain some form of persistence, usually in the form of a relational or NoSQL database. AWS offers both relational and NoSQL database infrastructure. Alternatively, you can deploy your own database software on an EC2 instance. The following table summarizes these options, and we discuss them in greater detail in this section.

| | Relational Database Solutions | NoSQL Solutions |
|---|---|---|
| Managed Database Service | Amazon RDS – MySQL, Oracle, SQL Server, MariaDB, PostgreSQL, Amazon Aurora | Amazon DynamoDB |
| Self-Managed | Hosting a relational DBMS on an EC2 instance | Hosting a NoSQL solution on an EC2 instance |

Amazon RDS

Amazon Relational Database Service (Amazon RDS) gives you access to the capabilities of a familiar MySQL, PostgreSQL, Oracle, or Microsoft SQL Server database engine. The code, applications, and tools that you already use can be used with Amazon RDS. Amazon RDS automatically patches the database software and backs up your database, and it stores backups for a user-defined retention period. It also supports point-in-time recovery. You benefit from the flexibility of being able to scale the compute resources or storage capacity associated with your relational database instance by making a single API call.

In addition, Amazon RDS Multi-AZ deployments increase your database availability and protect your database against unplanned outages. Amazon RDS Read Replicas provide read-only replicas of your database, so you can scale out beyond the capacity of a single database deployment for read-heavy database workloads. As with all AWS services, no upfront investments are required, and you pay only for the resources you use.
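
On the application side, taking advantage of read replicas usually means sending writes to the primary and spreading reads across the replicas. The following sketch shows one naive way to do that; the endpoint names are hypothetical, and classifying statements by a leading `SELECT` is a simplification that a real application would replace with explicit read and write connections.

```python
import itertools


class ReplicaRouter:
    """Send reads to replicas in round-robin order and everything else to the primary."""

    def __init__(self, primary, replicas):
        self.primary = primary
        self._replicas = itertools.cycle(replicas) if replicas else None

    def endpoint_for(self, sql):
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self.primary


router = ReplicaRouter(
    primary="primary.db.example.internal",
    replicas=["replica-1.db.example.internal", "replica-2.db.example.internal"],
)
print(router.endpoint_for("SELECT * FROM orders"))
print(router.endpoint_for("UPDATE orders SET status = 'shipped' WHERE id = 7"))
```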

Hosting a Relational Database Management System (RDBMS) on an Amazon EC2 Instance

In addition to the managed Amazon RDS offering, you can install your choice of RDBMS (such as MySQL, Oracle, SQL Server, or DB2) on an EC2 instance and manage it yourself. AWS customers hosting a database on Amazon EC2 successfully use a variety of master/slave and replication models, including mirroring for read-only copies and log shipping for always-ready passive slaves.

When managing your own database software directly on Amazon EC2, you should also consider the availability of fault-tolerant and persistent storage. For this purpose, we recommend that databases running on Amazon EC2 use Amazon Elastic Block Store (Amazon EBS) volumes, which are similar to network-attached storage. For EC2 instances running a database, you should place all database data and logs on EBS volumes. These will remain available even if the database host fails. This configuration allows for a simple failover scenario, in which a new EC2 instance can be launched if a host fails, and the existing EBS volumes can be attached to the new instance. The database can then pick up where it left off.

EBS volumes automatically provide redundancy within the Availability Zone, which increases their availability over simple disks. If the performance of a single EBS volume is not sufficient for your database’s needs, volumes can be striped to increase IOPS performance for your database. For demanding workloads you can also use EBS Provisioned IOPS, where you specify the IOPS required. If you use Amazon RDS, the service manages its own storage so you can focus on managing your data.

NoSQL Solutions

In addition to support for relational databases, AWS also offers Amazon DynamoDB, a fully managed NoSQL database service that provides fast and predictable performance with seamless scalability. Using the AWS Management Console or the DynamoDB API, you can scale capacity up or down without downtime or performance degradation. Because DynamoDB offloads the administrative burdens of operating and scaling distributed databases to AWS, you don’t have to worry about hardware provisioning, setup and configuration, replication, software patching, or cluster scaling.

Amazon SimpleDB provides a lightweight, highly available, and fault-tolerant core nonrelational database service that offers querying and indexing of data without the requirement of a fixed schema. SimpleDB can be a very effective replacement for databases in data access scenarios that require one big, highly indexed and flexible schema table.

Additionally, you can use Amazon EC2 to host many other emerging technologies in the NoSQL movement, such as Cassandra, CouchDB, and MongoDB.

Storage and Backup of Data and Assets

There are numerous options within the AWS Cloud for storing, accessing, and backing up your web application data and assets. Amazon S3 provides a highly available and redundant object store. Amazon S3 is a great storage solution for somewhat static or slow-changing objects, such as images, videos, and other static media. Amazon S3 also supports edge caching and streaming of these assets by interacting with CloudFront.

For attached file system-like storage, EC2 instances can have EBS volumes attached. These act like mountable disks for running EC2 instances. Amazon EBS is great for data that needs to be accessed as block storage and that requires persistence beyond the life of the running instance, such as database partitions and application logs.

In addition to having a lifetime that is independent of the EC2 instance, you can take snapshots of EBS volumes and store them in Amazon S3. Because EBS snapshots only back up changes since the previous snapshot, more frequent snapshots can reduce snapshot times. You can also use an EBS snapshot as a baseline for replicating data across multiple EBS volumes and attaching those volumes to other running instances.

EBS volumes can be as large as 16 TB, and multiple EBS volumes can be striped for even larger volumes or for increased I/O performance. To maximize the performance of your I/O-intensive applications, you can use Provisioned IOPS volumes. Provisioned IOPS volumes are designed to meet the needs of I/O-intensive workloads, particularly database workloads that are sensitive to storage performance and consistency in random access I/O throughput. You specify an IOPS rate when you create the volume and Amazon EBS provisions that rate for the lifetime of the volume. Amazon EBS currently supports up to 20,000 IOPS per volume. You can stripe multiple volumes together to deliver thousands of IOPS per instance to your application.
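
The striping arithmetic can be sketched as follows, using the per-volume figure quoted above as a default. This is a back-of-the-envelope estimate that assumes IOPS add up linearly across striped volumes and ignores any limit imposed by the instance itself; the function names are hypothetical.

```python
import math

MAX_IOPS_PER_VOLUME = 20_000  # per-volume figure quoted in the text above


def volumes_needed(target_iops, per_volume_iops=MAX_IOPS_PER_VOLUME):
    """Smallest number of striped volumes whose combined IOPS meets the target."""
    if target_iops <= 0:
        raise ValueError("target_iops must be positive")
    return math.ceil(target_iops / per_volume_iops)


def striped_iops(volume_count, per_volume_iops=MAX_IOPS_PER_VOLUME):
    """Aggregate IOPS of a stripe set, assuming linear scaling."""
    return volume_count * per_volume_iops


count = volumes_needed(50_000)   # 50,000 / 20,000 = 2.5, rounded up to 3
print(count, striped_iops(count))
```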

Automatically Scaling the Fleet

One of the key differences between the AWS Cloud architecture and the traditional hosting model is that AWS can automatically scale the web application fleet on demand to handle changes in traffic. In the traditional hosting model, traffic forecasting models are generally used to provision hosts ahead of projected traffic. In AWS, instances can be provisioned on the fly according to a set of triggers for scaling the fleet out and back in. The Auto Scaling service can create capacity groups of servers that can grow or shrink on demand. Auto Scaling also works directly with Amazon CloudWatch for metrics data and with Elastic Load Balancing to add and remove hosts for load distribution. For example, if the web servers are reporting greater than 80 percent CPU utilization over a period of time, an additional web server could be quickly deployed and then automatically added to the load balancer for immediate inclusion in the load-balancing rotation.

As shown in the AWS web hosting architecture model, you can create multiple Auto Scaling groups for different layers of the architecture, so that each layer can scale independently. For example, the web server Auto Scaling group might trigger scaling in and out in response to changes in network I/O, whereas the application server Auto Scaling group might scale out and in according to CPU utilization. You can set minimums and maximums to help ensure 24/7 availability and to cap usage within a group.
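
The trigger logic can be illustrated with a small decision function. It is a simplified model, not the Auto Scaling service's actual policy engine: the 80 percent scale-out threshold comes from the example above, while the 30 percent scale-in threshold and the one-instance step are assumptions for this sketch.

```python
def desired_capacity(current, avg_cpu, minimum, maximum,
                     scale_out_at=80.0, scale_in_at=30.0):
    """Return the new instance count for a group given its average CPU utilization.

    Adds one instance above scale_out_at, removes one below scale_in_at,
    and always keeps the result within [minimum, maximum].
    """
    if avg_cpu > scale_out_at:
        target = current + 1
    elif avg_cpu < scale_in_at:
        target = current - 1
    else:
        target = current
    return max(minimum, min(maximum, target))


print(desired_capacity(current=2, avg_cpu=85.0, minimum=2, maximum=6))  # scales out
print(desired_capacity(current=6, avg_cpu=95.0, minimum=2, maximum=6))  # capped at max
print(desired_capacity(current=2, avg_cpu=10.0, minimum=2, maximum=6))  # held at min
```

The minimum keeps the group available around the clock, and the maximum caps spend during an unusually large spike.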

Auto Scaling triggers can be set both to grow and to shrink the total fleet at a given layer to match resource utilization to actual demand. In addition to the Auto Scaling service, you can scale Amazon EC2 fleets directly through the Amazon EC2 API, which allows for launching, terminating, and inspecting instances.

Additional Security Features

The number and sophistication of Distributed Denial of Service (DDoS) attacks are rising. Traditionally, these attacks are difficult to fend off. They often end up being costly in both mitigation time and power spent, as well as the opportunity cost from lost visits to your website during the attack. There are a number of AWS factors and services that can help you defend against such attacks. The first is the scale of the AWS network. The AWS infrastructure is quite large, and we allow you to leverage our scale to optimize your defense. Several services including Elastic Load Balancing, Amazon CloudFront, and Amazon Route 53 are effective at scaling your web application in response to a large increase in traffic.

Two services in particular help with your defense strategy. AWS Shield is a managed DDoS protection service that helps safeguard against various forms of DDoS attack vectors. The standard offering of AWS Shield is free and automatically active throughout your account. This standard offering helps to defend against the most common network and transport layer attacks. In addition to this level, the advanced offering grants higher levels of protection for your web application by providing you with near real-time visibility into an ongoing attack, as well as integrating at higher levels with the services mentioned earlier. Additionally, you get access to the AWS DDoS Response Team (DRT) to help mitigate large-scale and sophisticated attacks against your resources.

AWS WAF (web application firewall) is designed to protect your web applications from attacks that can compromise availability or security, or otherwise consume excessive resources. AWS WAF works inline with CloudFront or Application Load Balancer, along with your custom rules, to defend against attacks such as cross-site scripting, SQL injection, and DDoS. As with most AWS services, AWS WAF comes with a fully featured API that can help automate the creation and editing of rules for your WAF as your security needs change.

Failover with AWS

Another key advantage of AWS over traditional web hosting is the Availability Zones that give you easy access to redundant deployment locations. Availability Zones are physically distinct locations that are engineered to be insulated from failures in other Availability Zones. They provide inexpensive, low-latency network connectivity to other Availability Zones in the same AWS Region. As the AWS web hosting architecture diagram in Figure 3 shows, we recommend that you deploy EC2 hosts across multiple Availability Zones to make your web application more fault tolerant. It’s important to ensure that there are provisions for migrating single points of access across Availability Zones in the case of failure. For example, you should set up a database slave in a second Availability Zone so that the persistence of data remains consistent and highly available, even during an unlikely failure scenario. You can do this on Amazon EC2 or Amazon RDS with a click of a button.
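
To see why spreading hosts across Availability Zones improves fault tolerance, consider this small sketch. It places instances round-robin across zones and counts how many survive the loss of one zone. The zone names are placeholders, and the sketch models placement only; it does not call any AWS API.

```python
def spread_across_zones(instance_count, zones):
    """Assign instances to zones in round-robin order."""
    return [zones[i % len(zones)] for i in range(instance_count)]


def surviving_capacity(placement, failed_zone):
    """Number of instances still running if one zone becomes unavailable."""
    return sum(1 for zone in placement if zone != failed_zone)


zones = ["zone-a", "zone-b", "zone-c"]       # placeholder zone names
placement = spread_across_zones(6, zones)    # two instances per zone
remaining = surviving_capacity(placement, "zone-b")
print(placement, remaining)
```

With six instances over three zones, losing any single zone leaves four instances serving traffic, whereas a single-zone deployment would lose all six.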

While some architectural changes are often required when moving an existing web application to the AWS Cloud, there are significant improvements to scalability, reliability, and cost-effectiveness that make using the AWS Cloud well worth the effort. In the next section, we discuss those improvements.

Key Considerations When Using AWS for Web Hosting

There are some key differences between the AWS Cloud and a traditional web application hosting model. The previous section highlighted many of the key areas that you should consider when deploying a web application to the cloud. This section points out some of the key architectural shifts that you need to consider when you bring any application into the cloud.

No More Physical Network Appliances

You cannot deploy physical network appliances in AWS. For example, firewalls, routers, and load balancers for your AWS applications can no longer reside on physical devices but must be replaced with software solutions. There is a wide variety of enterprise-quality software solutions, whether for load balancing (e.g., Zeus, HAProxy, NGINX Plus, and Pound) or establishing a VPN connection (e.g., OpenVPN, OpenSwan, and Vyatta). This is not a limitation of what can be run on the AWS Cloud, but it is an architectural change to your application if you use these devices today.

Firewalls Everywhere

Where you once had a simple DMZ and then open communications among your hosts in a traditional hosting model, AWS enforces a more secure model, in which every host is locked down. One of the steps in planning an AWS deployment is the analysis of traffic between hosts. This analysis will guide decisions on exactly what ports need to be opened. You can create security groups within Amazon EC2 for each type of host in your architecture. In addition, you can create a large variety of simple and tiered security models to enable the minimum access among hosts within your architecture. The use of network access control lists within Amazon VPC can help lock down your network at the subnet level.

Consider the Availability of Multiple Data Centers

Think of Availability Zones within an AWS Region as multiple data centers. EC2 instances in different Availability Zones are both logically and physically separated, and they provide an easy-to-use model for deploying your application across data centers for both high availability and reliability. Amazon VPC as a regional service allows you to leverage Availability Zones while keeping all of your resources in the same logical network.

Treat Hosts as Ephemeral and Dynamic

Probably the most important shift in how you might architect your AWS application is that Amazon EC2 hosts should be considered ephemeral and dynamic. Any application built for the AWS Cloud should not assume that a host will always be available and should be designed with the knowledge that any data that is not on an EBS volume will be lost if an EC2 instance fails. Additionally, when a new host is brought up, you shouldn’t make assumptions about the IP address or location within an Availability Zone of the host. Your configuration model must be flexible, and your approach to bootstrapping a host must take the dynamic nature of the cloud into account. These techniques are critical for building and running a highly scalable and fault-tolerant application.

Consider a Serverless Architecture

This whitepaper primarily focuses on a more traditional web architecture. However, newer services like AWS Lambda and Amazon API Gateway enable you to build a more serverless web application that abstracts away the use of virtual machines to perform compute. In these cases, code is executed on a request-by-request basis, and you pay only for the number of requests and the length of requests. You can find out more about serverless architectures here.
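
As a minimal sketch of what request-by-request execution looks like, the following Python function follows the handler shape used by AWS Lambda behind an API Gateway proxy integration: it receives an event describing the HTTP request and returns a dictionary with a status code, headers, and a string body. The greeting logic and the `name` query parameter are hypothetical, and the function can be exercised locally with a hand-built event.

```python
import json


def handler(event, context):
    """Handle one HTTP request passed in by API Gateway (proxy integration assumed)."""
    # queryStringParameters may be absent or None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local invocation with a hand-built event; no AWS resources are involved.
response = handler({"queryStringParameters": {"name": "AWS"}}, None)
print(response["statusCode"], response["body"])
```

There is no server process to manage here: each invocation handles one request, which is what makes per-request billing possible.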

Conclusion

There are numerous architectural and conceptual considerations when you are contemplating migrating your web application to the AWS Cloud. The benefits of having a cost-effective, highly scalable, and fault-tolerant infrastructure that grows with your business far outstrip the effort of migrating to the AWS Cloud.

Contributors

The following individuals and organizations contributed to this document:

- Jack Hemion, Associate Solutions Architect, AWS

- Matt Tavis, Principal Solutions Architect, AWS

- Philip Fitzsimons, Sr. Manager Well-Architected, AWS

Further Reading

- Getting started guide – AWS Web Application hosting for Linux

- Getting started guide – AWS Web Application hosting for Windows

- Getting started Video Series: Linux Web Applications in the AWS Cloud

- Getting started Video Series: .NET Web Applications in the AWS Cloud

Document Revisions

| Date | Description |
|---|---|
| September 2019 | Updated icon label for “Caching with ElastiCache” in Figure 3. |
| July 2017 | Multiple sections added and updated for new services. Updated diagrams for additional clarity and services. Addition of VPC as the standard networking method in AWS in “Network Management.” Added section on DDoS protection and mitigation in “Additional Security Features.” Added a small section on serverless architectures for web hosting. |
| September 2012 | Multiple sections updated to improve clarity. Updated diagrams to use AWS icons. Addition of “Managing Public DNS” section for detail on Amazon Route 53. “Finding Other Hosts and Services” section updated for clarity. “Database Configuration, Backup, and Failover” section updated for clarity and DynamoDB. “Storage and Backup of Data and Assets” section expanded to cover EBS Provisioned IOPS volumes. |
| May 2010 | First publication |

Notes

https://aws.amazon.com/vpc/

https://aws.amazon.com/cloudfront/

https://aws.amazon.com/s3/

https://aws.amazon.com/ec2/

https://aws.amazon.com/route53/

https://aws.amazon.com/security/

https://aws.amazon.com/elasticloadbalancing/

http://docs.aws.amazon.com/AWSEC2/latest/APIReference/Welcome.html

https://aws.amazon.com/simpledb/

https://aws.amazon.com/elasticache/

https://aws.amazon.com/rds/

https://aws.amazon.com/ebs/

https://aws.amazon.com/dynamodb/

https://aws.amazon.com/autoscaling/

https://aws.amazon.com/shield/

https://aws.amazon.com/waf/

https://aws.amazon.com/lambda/

https://aws.amazon.com/api-gateway/